Moisés Horta: “Decolonizing Artificial Intelligence”

Published 2 October 2020 by la rédaction

Makery met Moisés Horta in Linz during Ars Electronica, where he was performing an audiovisual live show at Kepler’s Gardens, mixing ancient and contemporary media in a multisensory experience of the symbiosis between AI and ancestral pre-hispanic technologies of the spirit. Later, he discussed neural networks in sound and visual arts.

Moisés Horta Valenzuela is a sound artist, technologist and electronic musician from Tijuana, México, working in the fields of computer music and the history and politics of emerging technologies. Makery had an interesting conversation with him after his talk at Ars Electronica’s “Artificial Stupidity” conference on September 13. From Music & AI to deep fakes. Interview.

What is your background?

My name is Moisés Horta. I come from Tijuana, Mexico, but now I reside in Berlin. I’ve been working as a self-taught electronic musician. For many years, I was part of the Ruidosón scene on the border between Mexico and the United States (if you want to know more about this movement, there is a book, also in PDF).

Ruidosón is a sound that friends and myself were developing back in 2009. We were trying to make a unique sound that came from the border, in response to the idea of globalization. I co-founded a band called Los Macuanos in which I was active for about six years. We were sampling pre-hispanic music, we were flipping it, making a really dark version of it, while also putting a lot of political intention in it. At that time, Tijuana was overwhelmed with violence, but we were organizing parties.

After that, I moved to Mexico City, where I started to focus more on art and technology. I was exploring the idea of identity: What do national identities mean in a global context? I was criticizing the idea of globalization—when we talk about globalization, we are actually talking about the cultural hegemony of the United States, Europe and the Global North, or at least Western ideas. This critical approach has always been part of my theme. In Mexico City I started developing sound art installations.

Then in 2016 I moved to Europe and met the Danish sound artist Goodiepal. He was working on radical computer music and talking a lot about Artificial Intelligence in a speculative but critical way. It was my first introduction to neural networks, and I was approaching this with a very critical perspective, because I was very influenced by his work. Then I moved to Berlin, where I continued to work on new computer music and sound art, while always incorporating this criticism of globalization, identity, etc.

Tell us more about your project Hexorcismos.

Hexorcismos grew out as a side project. It started in 2013 when I was still living in Mexico City. It’s a musical project which I used to explore the relationship between electronic music composition and sound art, and as a way to present a more abstract way of incorporating themes in music. I was interested in Sonido 13, a theory on microtonal music from the early 20th century, I thought this idea could be moved forward. Hexorcismos is an experimental project, that lets me more freely explore, by not necessarily making sound art or electronic dance music.

In 2013, I did a project on sound art archival research in Mexico City, organized by the head of the NAAFI label. We had to work with the archives of the Fonoteca Nacional, resampling and reinterpreting the archive material. So I explored the works of electronic music pioneers in Mexico, such as Antonio Russek, Hector Quintanar and others. I found it interesting to uncover all the heritage of early electronic and experimental music in Latin America.

What was your performance at Ars Electronica about?

Neltokoni in Cuícatl, my performance at Ars Electronica this year, is a more recent work. It was made with a new team, and it’s about generative AI systems and neural networks. It’s part of the project relating to the album I released in May, called Transfiguracion. I worked with raw audio synthesis. There are some AI systems that work with MIDI, but it’s constrained by design to 12 tones, so it is conforming to a specific epistemology of sound. I prefer working with raw audio synthesis, which is not so limited.

I’m interested in uncovering what these technologies can do for music-making, what are their specificities, what can happen only when you use these systems. The failures of the algorithm, the glitches, are a very important part, as a conceptual practice. It challenges the idea of perfection of the AI systems, which some claim make perfect sound and match your need exactly, as with other new technologies. You know, when synthesizers or the MPC, for example, were released, in the beginning people were trying to replicate existing musical styles with these technologies, but then new kinds of music emerged as soon as people started abusing the technology’s original design, using it in a way that was not intended. It’s also what always drives my practice of instruments or electronics or installations. I am very interested in the hacking approach to music-making.

In this performance, I used an AI system that performed what is called “style transfer”—it changes styles of music, morphing from one style to another. I used Antonio Zepeda’s “Templo Mayor” album released in 1980 as an audio source. This was already an experimental music album. I wanted to hear what the AI system would do with these pre-hispanic sounds morphed with my own corpus of music from the past five years, not in a perspective of purity, but with the idea of creating a lineage, a continuum. The traces of pre-hispanic instruments had been lost through time, through the process of colonization. So it’s an effort to create a bridge between ancient ways of making music and contemporary electronic music.

The general impetus is to decolonize Artificial Intelligence. This technology has so many embedded biases. When you work with it, you see what it is made of. We could almost call it Artificial Stupidity. These technologies are just as good as the data you pour into it, and they have the biases of the persons who made them. So, I’m also trying to demystify these ideas of AI as something that could save humanity, or could create this protective way between everybody. By using AI’s generative capabilities in sound, it’s interesting to point out that models were made with Western music in mind, and what happens when glitching them, creating unexpected panic in them.

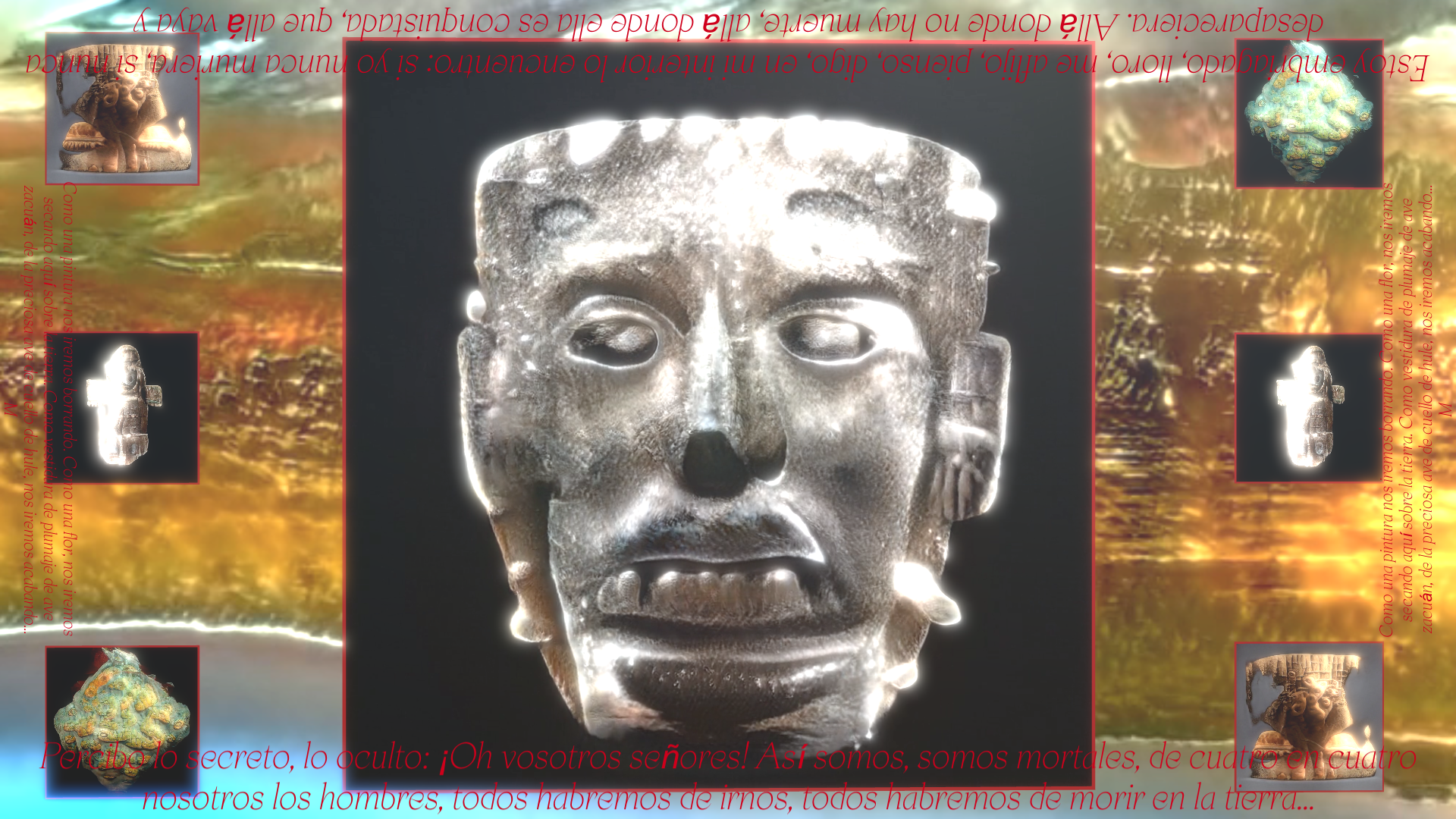

The performance for Ars Electronica, Neltokoni in Cuícatl, uses three AI generative systems. One uses the GPT-2 model for text generation, which has been trained with poetry in ancient Nahuatl, a language largely used in pre-hispanic Mexico, but which is still alive. This model can be trained by any language, even a non-alphabetical one like emojis or icons. Based on the data, it looks at the patterns and tries to predict what will be the next character. Initially the model was trained in English, of course. There were not many available datasets in Nahuatl—which also raises another interesting question: How do we bring these epistemologies into the far future?

For the images, I essentially scanned Instagram for pictures of heritage artifacts and talismans from Mesoamerica. I found this interesting because it was like re-appropriating images from a surveillance capitalist platform (Facebook), and giving them a new life. It’s a way to resist, taking back these artifacts and bringing them to life in a performance. The metaphorical idea for this performance is that these artifacts, these talismans – “Neltokoni” means “talisman” in Nahuatl and “in Cuícatl” means “in poetry” – are speaking in form of song of the process of how they became objectified and presented in the West. The last object speaking in the performance is Moctezuma’s headdress, which, coincidentially is in Austria, Vienna, to be precise. They are speaking of the colonial process and I see this as a subversive act because it makes them alive again, the culture and the knowledge, and not dead and exhibited in a museum. Usually in Nahuatl, you say “in Xochitl, in Cuícatl” which means “in flowers, and in song”. It’s kind of a way to make them tell a story, in a metaphorical way, to make them talk. In the performance the visual is driving the sound, so the pictures are speaking by themselves, they are transfigured into sound, it’s a visual sound approach.

The visuals generative themselves, they are generated by a model called StyleGAN2 developed by NVIDIA. Once I trained the model with a thousand images, it created a “latent space”, which can synthesize and morph through images via patterns it recognizes within the dataset. In the performance, this morphing was the sound composition itself.

I see AI as a new tool that can leverage new skills. For example, I am not a very good visual artist, and it helped me to create new material for visuals. Actually these AI systems, like GPT-2 or StyleGAN, are very narrow artificial intelligences, they are really good at specific things, compared to this conceptual idea of a general-purpose AI that supposedly could do anything. I am very doubtful if this can happen. These technologies are artificially stupid, because they can just do one thing.

In the book Nooscope by Vladan Joler and Matteo Pasquinelli, the authors demystify the technology, I really like how they explain it. They make a nice comparison, basically that AI is to the mind and information, what microscopes and telescopes are to the eye, i.e. tools that open to new worlds and modes of perception. For example, AI reduces human-time by analyzing these data—what we don’t have time to see, because it’s too much data. It is a mundane way to think about these technologies, because they are really stupid, they don’t suggest anything, but they are really good for finding patterns within huge amounts of data. They create a kind of feedback loop, like you get something from the output of the system, you can work on it and then re-inject the results into the system.

You also investigated the field of deep fakes?

I worked with an algorithm called Wav2Lip. It does lip-syncing. You put in a video of a person talking or whatever, then an audio track, preferably of a voice talking, and automatically you get the video of this person lip-synching the audio. It was originally designed to overdub movies. Obviously you can do funny things with it, but also malicious stuff. For me, in the context of Latin America and in the Global South, the question is more that people need to be aware that these technologies exist and are very accessible. I worked on a deep fake project with a critical approach to this technology, a pedagogical approach. I made this video on deep fakes, and then people came to me and said “Ah Ok, so you don’t need to be a super video producer to do this, you just push a button and it’s really convincing.” It’s kind of educational, but I also made it funny… We see this technology being used more and more by right-wing trolls. It started with porn, replacing faces in porn videos, so it needs to grow out of that very macho scene. I also work with voice-cloning technology, and combining the two techniques can potentially do huge damage. It can be really “real”, especially if you don’t know these technologies exist.

I want to have another approach, which is funnier and more playful. I’m excited with the idea of synthetic media, which is the proper term to call all the generative AI outputs. There will be a point in history where everything we will see on the Internet, we will not be able to say if it has been made by a person or by an AI. That is the real danger. There is already a channel where you can see two little bots having a conversation, responding to each other using only GPT-2. It’s scary, but also funny and harmless. With these systems you can create huge amounts of data, and soon nobody could say if it’s real or not.

This is what I’m interested in now, raising questions about these technologies. I’m not specifically addressing the art community, because I know they are already aware of these kind of things, I would prefer reaching people who are maybe not familiar with these technologies, a more general public.

View this post on Instagram@hexorcismos el amlo que queremos pero nunca vamos a tener #fuckthepolice #acab

A post shared by ? (@mexicoissocheap) on

What are your next upcoming projects?

I will participate in the Transart festival in Italy, performing my album “Transfiguracion”. I also have a project still in progress, entitled “The New Pavlovian Bells”, in which I want to explore the effects of notifications from Facebook, Twitter, etc. All these platforms were made with guidelines from behavioral psychology. Like in the experiences of Pavlov, when the dog heard bells he started salivating, now we have all these bells engaging us to do something. I want to explore a bit what it does to our brains, emotions and mental health. I also give introductory workshops in machine learning for musicians, sound artists and the general public. The next round will start in late-October/early-November.

Follow Moisés Horta: Website, Instagram, Twitter

Listen to Hexorcismos on Bandcamp