A symposium takes aim at the moving target of AI

Published 18 December 2018 by Cherise Fong

The 1st Japanese-German-French symposium on research, applications and issues surrounding artificial intelligence was held in Tokyo on November 21-22, 2018.

Tokyo, from our correspondent

“It’s also important to talk about artificial intelligence in order to dedramatize it,” argues Cédric Villani, the charismatic French mathematician and politician with the silver spider brooch, in his presentation “Artificial Intelligence for Humanity”. “The word ‘intelligence’ is scary. Maybe instead we should say ‘optimization’ or ‘correlation’… It’s not as scary, but it’s also not as sexy.”

It’s a recurring theme of these two days of talks and discussions during the first trilateral and multidisciplinary symposium on artificial intelligence (AI), organized by the German Centre for Research and Innovation (DWIH) Tokyo in partnership with the Embassy of France in Japan: How can we use AI to improve our quality of life and society as a whole?

Experts in artificial intelligence, as well as researchers in technological and social sciences from Japanese, German and French institutions, gathered at Toranomon Hills in Tokyo in an effort to sort out this emerging field of study, perhaps just as misunderstood as it is polarizing.

Opening the second day of the symposium, Wolfram Burgard, from the University of Freiburg, listed a few idyllic scenarios benefiting from the contributions of AI: fewer road accidents, optimized and accessible healthcare, sustainable agriculture to feed the whole planet… among other societal solutions on a global scale. In counterpoint, Villani reminded us that potential scenarios range from “extraordinarily beautiful to extraordinarily ugly”: bankruptcy, war… the unspeakable. Or precisely what is so scary about the word “intelligence”.

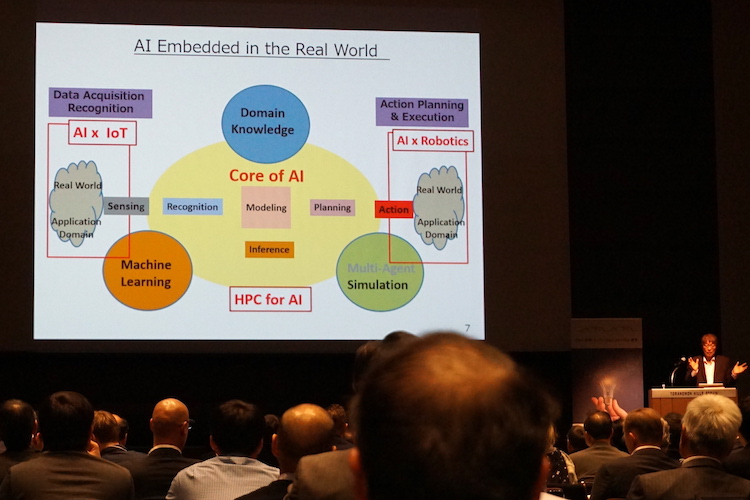

Daniel Andler, a former mathematician who now specializes in cognitive sciences at the Sorbonne University in Paris, tellingly titled his presentation: “The Crucial Importance of Understanding AI”. He emphasized that AI is a moving target, an engineering project where we don’t agree on what it means or where it’s going. If artificial intelligence is an ersatz version of human intelligence, he asks, should we focus on building specific tools for localized tasks, or make it more flexible and universal so that it can adapt to unlimited contexts? To go deeper into AI, with all its pragmatic and ethical issues, insists Andler, it is essential to study cognitive sciences, as well as social sciences such as sociology and anthropology.

Happiness and cobotics

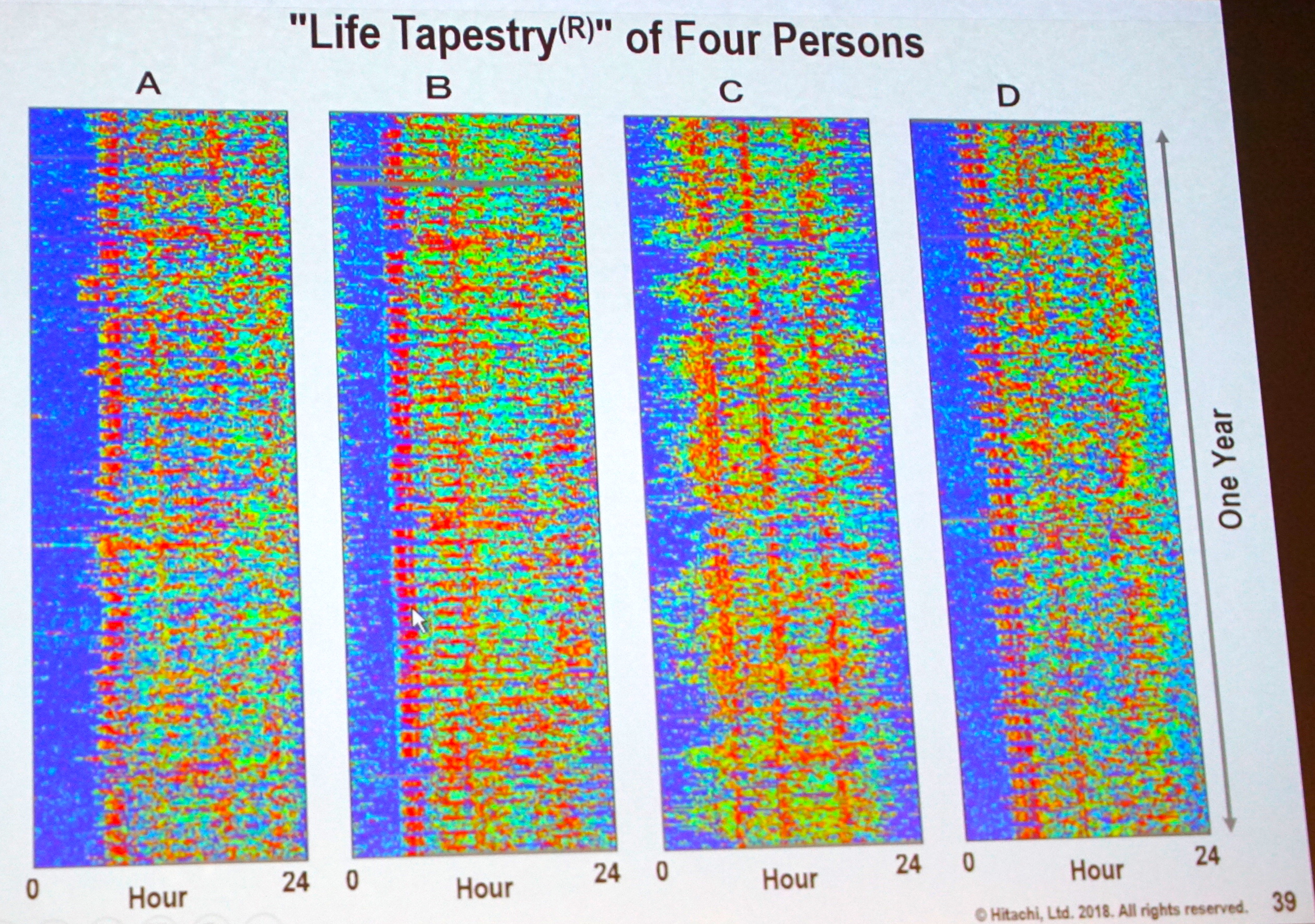

Returning to the primary applications of AI, optimization and efficiency, Kazuo Yano of Hitachi argued for a correlation between happiness and productivity, which he claims is evidenced by analyses of people’s physical activity over time. In short, the more people are intentionally and physically active, the happier they are, and the more productive they become.

His presentation opened with a robot learning to swing, which progressively learns to master gymnastics. Its autonomous learning by the process of trial and error turned out to be as emotionally moving as the hard-earned success of a child who finally prevails through blood, sweat and tears. In the absence of rules, only the result counts. “The ultimate goal of learning is happiness,” Yano concluded.

But what about the hypothetical Singularity, when machine intelligence merges with, or even surpasses, human intelligence? During the plenary session on the “New working environment with AI”, speakers talked less about convergence than peaceful coexistence and symbiotic collaboration between humans and the technology that serves them.

Matthias Peissner, from the Fraunhofer Institute for Industrial Engineering IAO, affirmed that work is about social participation. Referencing the self-determination theory of human motivation, he emphasized the importance of preserving the human feelings of competence, autonomy and relatedness, by creating opportunities for people and machines to learn together, in symbiosis (cobotics).

Cities: intelligent, inclusive, sustainable

From a social point of view, AI is often linked to the 4th Industrial Revolution, where the optimization of productivity and urban mobility in the Internet of Things is often too easily reduced to a buzzword: “smart city”.

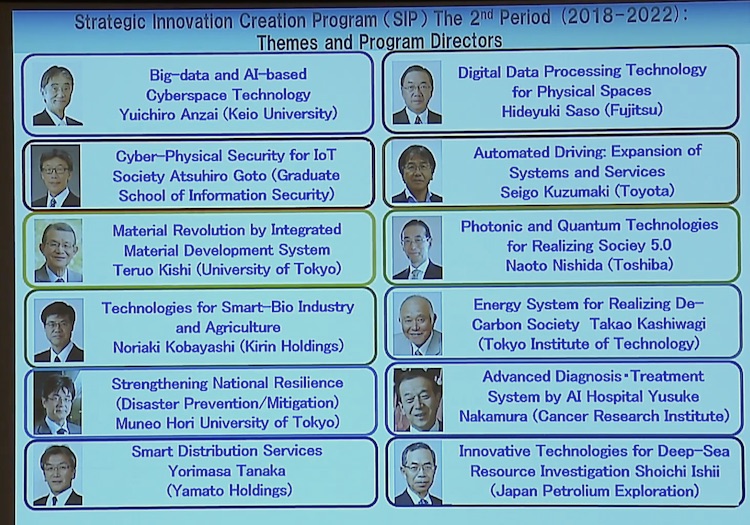

And yet, Yuichiro Anzai, president of the Japan Society for the Promotion of Science, remarked that this use of the word “smart” is more reminiscent of science-fiction from the 1960s and ’70s. According to Anzai, the definition of the present and future smart city isn’t necessarily fully automated, but rather sustainable and inclusive.

Speaking of which, inclusiveness as a principle would have been significantly more convincing had it been applied across the board… Anzai proudly displayed the 12 directors of the Japanese government’s Strategic Innovation Program, only to reveal that all 12 of them are male. Of course, the problem of gender monopoly in government, business, academia and society lies much deeper. But this sobering statistic was unfortunately echoed in the organization of this symposium, where 12 out of 12 AI experts invited to speak on the main stage, including the two moderators, were men.

While in developing countries AI is geared more toward universal access (to medical treatment, for example), in richer countries AI combined with big data, and associated with the smart city, soon runs up against problems of security and privacy.

During the break-out session dedicated to the smart city, an anthropologist participant pointed out that the definition of “smart” seemed to change with each presentation: What are the metrics of a “smart” city?

Amélie Cordier, Chief Scientific Officer of Hoomano and founder of the French collective LYON-iS-Ai, was unambiguous in her response: environmental impact.

Hideaki Takagi, from the Economic Affairs Bureau of the City of Yokohama, added to the ecological quality of a smart city the quality of life of its residents: inclusiveness of diverse lifestyles; access to individual happiness. He highlighted the need to state a clear value of the smart city for all its citizens. For example, no crime—but at the expense of individual privacy?

Ethics and emotions

From an almost philosophical perspective, one of the parallel break-out sessions focused on the ethical and legal aspects of AI. According to Arisa Ema, researcher in alternative policies at the University of Tokyo, artificial intelligence is a mirror of our society; AI researchers have a social responsibility to ensure the ethical and unprejudiced behavior of their algorithms and bots. “It’s a conceptual investigation that would particularly benefit from international and interdisciplinary cooperation,” she said.

Already in 2017 and 2018, the Japanese Society for Artificial Intelligence drafted ethical guidelines for artificial intelligence R&D and Utilization Principles. They include collaboration, transparency, security, quality of data, respect for privacy, but also social responsibility, human dignity and contribution to humanity.

Ethical issues get more complicated when considering the legal status of artificially intelligent agents. Once intelligent machines become equally emotive and autonomous, can they effectively be considered as legal persons?

Laurence Devillers, AI researcher at the Sorbonne University and CNRS in Paris, showed several examples of affective computing in robots, referencing Plutchik’s wheel of emotions to categorize human emotions. But robots such as Nao, which is capable of analyzing emotions in human voices, as well as other disembodied chatbots and emobots, only give the illusion of human intelligence, she reminded us.

Even an internationally glorified robot like Sophia spits out prescripted dialogues along with mimetic facial expressions to seduce the masses; for better or worse, it’s a well-trained puppet on which we project our fantasies. Devillers deplored the misinformation that surrounds AI, while pointing out in passing that the vast majority of “emotive” robots have female bodies and were designed by men. “Empathy is impossible in robots,” she concluded. “They have no emotional intelligence.”

Christoph von der Malsburg, from the Frankfurt Institute for Advanced Studies, disagreed. He countered that from a behavioral perspective, the machines of the future will have emotions. He predicts that we will eventually be able to initialize AI with the mental capacity of a 3-year-old child, for example. Then it will be a question of restricting its range of behavior and defining a code of ethics.

Jan-Erik Schirmer, researcher at Humboldt University of Berlin and the author of a paper on AI and legal personality, proposes considering artificial agents not according to their capacity or behavior, but from a social sciences perspective. If Sophia is a de facto Saudi citizen and Paro the original seal robot is officially registered as a family member of its Japanese creator, new AI agents deserve their own status, if only to avoid the thorny moral issues of humanizing them before the law.

Schirmer introduces the German term Teilrechtsfähigkeit, or partial legal capacity, which designates a particular status between object and subject. This “bauhaus” solution, where form follows function, consists of reconstructing a limited version of the status of subject according to the needs of a particular situation (similar to the status of the unborn child)… Will this promising and pragmatic legal strategy be able to evolve at the speed of its moving target?

If the United States has GAFA, China has big data, Germany has Industry 4.0, Japan has Society 5.0 and France has Cédric Villani, we could all benefit from more international cooperation and collaboration in multilateral events such as this one.

More information on the AI symposium organized by DWIH